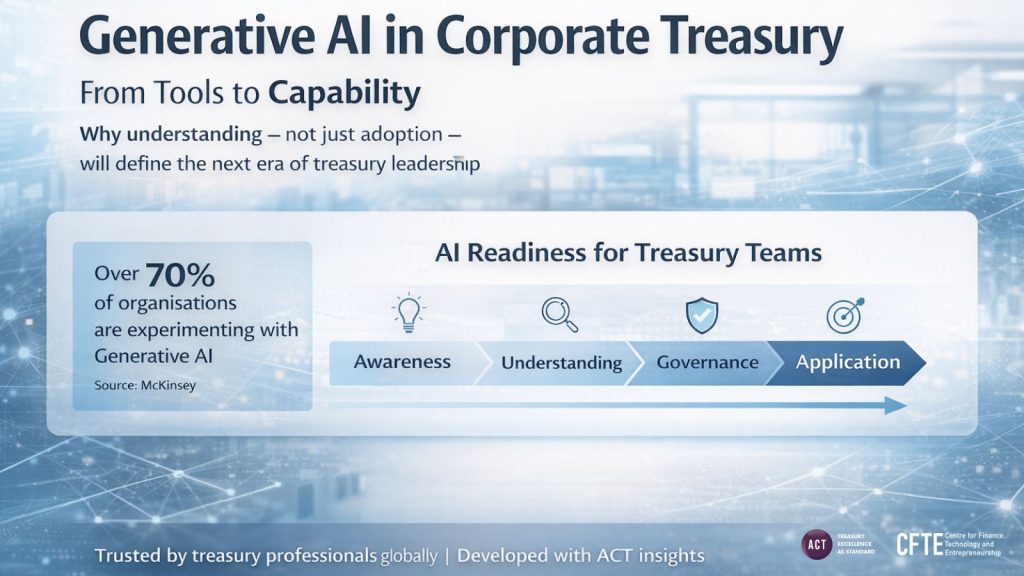

Generative AI is already finding its way into corporate treasury through forecasting tools, reporting workflows, and analytical platforms. According to McKinsey, over 70% of organisations globally are experimenting with generative AI, including within finance and risk functions. Yet only a small proportion have embedded it meaningfully into core decision-making.

In treasury, this gap matters. Decisions here affect liquidity, risk exposure, governance, and trust across the organisation.

From automation to judgement

For many years, AI in treasury focused on automation:

- Rules-based forecasting

- Reconciliation

- Exception detection

Generative AI represents a different kind of shift. Rather than simply executing predefined rules, these systems interpret information, synthesise large datasets, and generate narratives and scenarios that shape human judgement.

This expands what treasury teams can do, but it also introduces new risks.

Why oversight matters more, not less

Generative AI does not understand context or accountability in the way treasury professionals do. It operates on probabilistic patterns, which means it can produce outputs that appear confident but are incomplete or misleading.

The Bank for International Settlements (BIS) has repeatedly highlighted that as AI becomes more embedded in financial decision-making, human oversight becomes more important, not less. In treasury, speed without understanding can quickly translate into risk.

Adoption is moving faster than capability

Many organisations are deploying AI tools faster than they are building the capability to use them well.

According to PwC, fewer than 30% of finance leaders believe their teams fully understand the implications of AI for risk management, controls, and governance, despite widespread adoption.

Treasury teams are therefore left navigating questions such as:

- When should AI outputs be trusted, and when should they be challenged?

- How do models handle uncertainty, edge cases, or poor-quality data?

- What do regulators expect treasury teams to understand about AI use?

- Who remains accountable when decisions are AI-assisted?

As Janet Legge, Deputy Chief Executive of the Association of Corporate Treasurers (ACT), has noted, AI is transforming everyday treasury work, which makes upskilling an operational necessity.

What AI capability actually means for treasury

Being “interested in AI” is no longer enough. For treasury teams, real capability means the ability to:

- Understand how generative AI produces outputs

- Distinguish insight from hallucination

- Apply AI to treasury-specific use cases, not generic finance examples

- Navigate governance, auditability, and regulatory expectations

Research from the OECD and the World Economic Forum consistently shows that the biggest barrier to effective AI adoption is not technology, but skills, understanding, and organisational readiness.

A structured response to a real gap

The Generative AI in Corporate Treasury programme was designed to address this exact challenge.

Developed as part of CFTE’s AI in Finance Academy, the programme combines:

- Short, focused learning designed for working professionals

- Real-world treasury use cases, including scenarios where AI can fail

- Insights from senior practitioners at organisations such as Deutsche Bank, BAT, NatWest, and Pearson

- An industry-recognised certificate issued by CFTE and the ACT

Delivered in 15-minute daily sessions over four weeks, the programme fits into real treasury workflows while building lasting understanding rather than surface-level familiarity.

The objective is not tool proficiency.

It is decision confidence.

AI adoption in finance will continue regardless of readiness. The World Economic Forum estimates that AI will reshape the majority of finance roles over the next decade by changing how decisions are made and supported.

In this environment, the differentiator will not be access to technology, but the ability to use it with clarity, judgement, and accountability.

Generative AI is already part of corporate treasury. The more important question is whether it is being applied deliberately, with structure and understanding, or informally, without clear guardrails.