The latest AI in Finance Academy webinar, opened to the Global Women in AI community, brought together participants from across the world to explore how leaders can avoid decision-making traps when using AI and generative AI.

Led by Dr. Laura Aranas, Chief Risk Officer at Alliance Bank and Global Women in AI ambassador, the session walked through real-world cases from finance, HR and markets to show how AI can both unlock value and magnify risks such as discrimination, over-optimistic forecasts and flawed automation. Drawing on neuroscience and risk management, Dr. Aranas explained how our brains actually make decisions (System 1 vs System 2), why GenAI’s convincing narratives can amplify human biases, and how leaders can respond with practical governance.

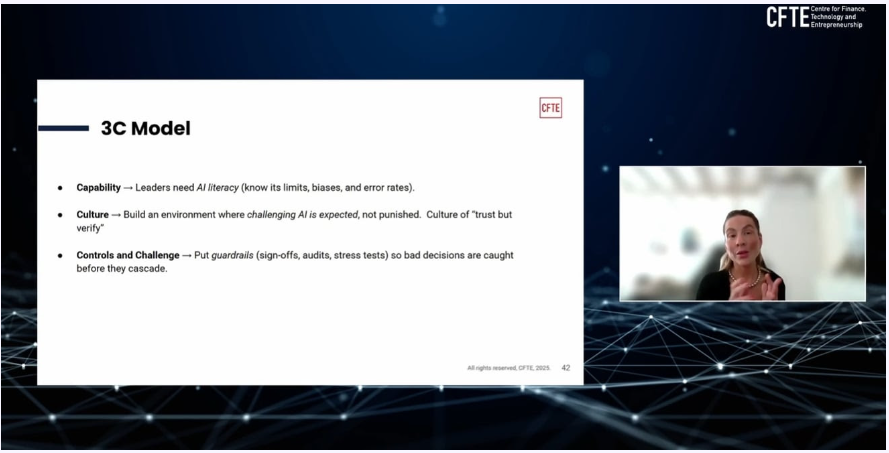

She closed with a “3C” framework – Capability, Culture and Control/Challenge – to help organisations build AI literacy, create a culture where questioning the model is normal, and put the right safeguards in place so humans and AI can collaborate responsibly.

5 Key Learning Outcomes from the Webinar

- Understand where AI traps come from

Participants distinguished between model/data-driven traps (bias in training data, overfitting, hallucinations) and human-driven traps (overconfidence, automation bias, herding), and learned why managing both together is essential.

- Recognise the neuroscience behind decision-making

The session explained how System 1 (fast, emotional) and System 2 (slow, analytical) shape decisions with AI, and how intentionally activating System 2 helps reduce impulsive, AI-influenced mistakes.

- Identify the six major AI decision traps

Attendees explored six key traps leaders face when using AI: automation bias, herding effect, overconfidence, anchoring, confirmation bias, and the illusion of objectivity, supported by real examples from trading, credit scoring and HR.

- See how GenAI amplifies risks

Beyond predictive models, participants learned how GenAI’s narrative-making capability can influence sentiment and decisions, accelerating bias, herd behaviour and misjudgment if not properly governed.

- Apply the 3C framework: Capability, Culture, Control

The webinar introduced a practical framework to guide responsible AI use:

• Capability – strengthen AI and data literacy, including error and uncertainty awareness.

• Culture – foster an environment where questioning AI outputs is encouraged.

• Control/Challenge – implement governance such as human-in-the-loop, stress tests, bias audits and model validation to ensure AI augments rather than replaces human judgment.

As the session wrapped up, one message stood out clearly: responsible AI leadership is no longer just a technical skill; it is a strategic imperative. Whether in finance, HR, risk or product teams, leaders must be equipped not only to use AI, but to question it, challenge it and guide it with sound judgment. The discussion reinforced that the organisations that will thrive are those that invest in literacy, build cultures of healthy scepticism and put controls in place before problems surface.

For the Global Women in AI community and the wider AI in Finance Academy network, this webinar served as both a learning experience and a call to action: to champion thoughtful, evidence-based AI adoption and ensure that the future of AI is built on fairness, rigour and human-centred decision-making.